Auto Ingest query logs from Hydrolix

To index Hydrolix query logs, enable autoingest from S3 for the query head logs.

First, update the tunables to allow AWS S3 notifications from your bucket. Export the current tunables to a file:

hdxctl tunables get hdxcli-$CLIENTID > tunables.toml

Add your bucket to the bucket_allowlist:

bucket_allowlist = [ "bucket1", "bucket2", "hdxcli-$CLIENTID",]
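Before pushing the file back, it can help to confirm that the bucket really is in the list. The following is a minimal sketch, not part of hdxctl: the helper names are hypothetical, and it assumes `bucket_allowlist` appears in tunables.toml as a single array of double-quoted strings, as in the line above.

```python
import re


def allowlisted_buckets(toml_text):
    """Return the bucket names found in the first bucket_allowlist array."""
    # Assumption: the array holds only double-quoted bucket names.
    match = re.search(
        r"^\s*bucket_allowlist\s*=\s*\[(.*?)\]", toml_text, re.S | re.M
    )
    if match is None:
        return []
    return re.findall(r'"([^"]+)"', match.group(1))


def is_allowlisted(toml_text, bucket):
    """True when the bucket is already present in bucket_allowlist."""
    return bucket in allowlisted_buckets(toml_text)


# Hypothetical sample content; in practice, read tunables.toml from disk.
sample = 'bucket_allowlist = [ "bucket1", "bucket2", "hdxcli-abc123",]\n'
print(is_allowlisted(sample, "hdxcli-abc123"))
```

A regular expression is used here only to keep the sketch dependency-free; a real TOML parser would be more robust if the file layout differs.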

Then apply the updated tunables to the cluster:

hdxctl tunables set hdxcli-$CLIENTID tunables.toml

Finally, update the cluster for your client ID:

hdxctl update hdxcli-$CLIENTID

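Next, in AWS, create a new SQS queue that will receive the S3 notifications for new log files.

Name the queue hdxcli-$CLIENTID-auto-query-head and attach the following access policy, replacing the region (us-east-2) and account ID (209166775408) in the Resource ARN with your own: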

{
  "Version": "2008-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Principal": {
        "Service": "s3.amazonaws.com"
      },
      "Action": "SQS:SendMessage",
      "Resource": "arn:aws:sqs:us-east-2:209166775408:hdxcli-$CLIENTID-auto-query-head",
      "Condition": {
        "ArnLike": {
          "aws:SourceArn": [
            "arn:aws:s3:*:*:hdxcli-$CLIENTID"
          ]
        }
      }
    }
  ]
}
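Because the policy embeds the region, account ID, and client ID, it can be convenient to generate it rather than edit it by hand. The sketch below is illustrative only: `build_queue_policy` is a hypothetical helper, and its three parameters stand for values you supply.

```python
import json


def build_queue_policy(region, account_id, client_id):
    """Build the SQS access policy shown above for a given client ID."""
    queue_name = f"hdxcli-{client_id}-auto-query-head"
    bucket_name = f"hdxcli-{client_id}"
    return {
        "Version": "2008-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "s3.amazonaws.com"},
                "Action": "SQS:SendMessage",
                "Resource": f"arn:aws:sqs:{region}:{account_id}:{queue_name}",
                # Only notifications coming from this bucket may send messages.
                "Condition": {
                    "ArnLike": {
                        "aws:SourceArn": [f"arn:aws:s3:*:*:{bucket_name}"]
                    }
                },
            }
        ],
    }


# Placeholder values; replace them with your own region, account, and client ID.
print(json.dumps(build_queue_policy("us-east-2", "123456789012", "abc123"), indent=2))
```

The printed JSON can be pasted into the queue's access policy editor.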

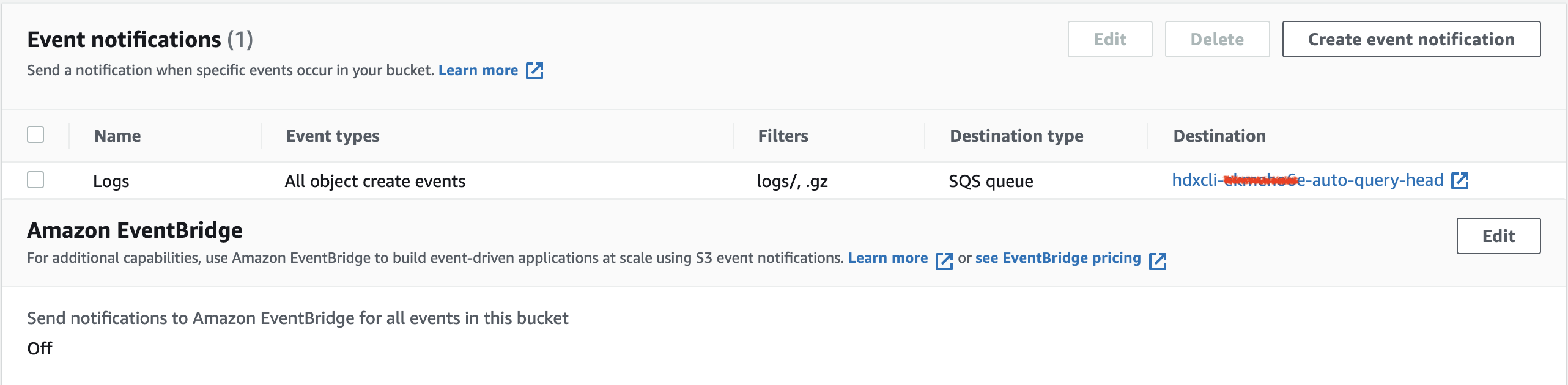

Finally, set up the notification from S3 to the new queue. Open the S3 bucket hdxcli-$CLIENTID, go to Properties, and click Create event notification. Use these settings:

Prefix: logs/

Suffix: .gz

Event types: All object create events

Destination: the SQS queue created earlier
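For reference, the same console settings can be expressed as the notification configuration structure that the S3 API accepts. This is a sketch under assumptions: `build_notification_config` is a hypothetical helper and the queue ARN is a placeholder. The resulting dictionary has the shape expected by boto3's `put_bucket_notification_configuration(Bucket=..., NotificationConfiguration=...)`, which is deliberately not called here.

```python
def build_notification_config(queue_arn, prefix="logs/", suffix=".gz"):
    """Mirror the console settings: all object-create events under logs/ ending in .gz."""
    return {
        "QueueConfigurations": [
            {
                "QueueArn": queue_arn,
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {
                    "Key": {
                        "FilterRules": [
                            {"Name": "prefix", "Value": prefix},
                            {"Name": "suffix", "Value": suffix},
                        ]
                    }
                },
            }
        ]
    }


# Placeholder ARN; use the ARN of the queue you created.
config = build_notification_config(
    "arn:aws:sqs:us-east-2:123456789012:hdxcli-abc123-auto-query-head"
)
print(config["QueueConfigurations"][0]["Events"])
```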

You should have something similar to this:

Now you can set up a project and table to ingest those logs automatically. The requests below use the .http syntax of the REST Client extension for VS Code:

### Global variables: replace with your own values
@host = host
@projectname = hydrologs
@tablename = query_head
@transformname = query_head_transform
@username = "user@example.com"
@password = "yyyyyyyy"
@clientid = hdxcli-xxxxx
@base_url = https://{{host}}.hydrolix.live/config/v1/

### Log in to get the access token and organization UUID
# @name login
POST {{base_url}}login
Content-Type: application/json

{
  "username": {{username}},
  "password": {{password}}
}

### Parse the login response and store the access token and organization ID
@access_token = {{login.response.body.auth_token.access_token}}
@org_id = {{login.response.body.orgs[0].uuid}}
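If you script these calls instead of using VS Code, the same two values can be pulled from the parsed login response. The sketch below assumes only the response paths used above (`auth_token.access_token` and `orgs[0].uuid`); the helper names and the sample body are hypothetical.

```python
def extract_credentials(login_body):
    """Return (access_token, org_id) from a parsed login response body."""
    access_token = login_body["auth_token"]["access_token"]
    org_id = login_body["orgs"][0]["uuid"]  # first organization, as in the request file
    return access_token, org_id


def auth_headers(access_token):
    """Headers sent with every later request in this walkthrough."""
    return {
        "Authorization": f"Bearer {access_token}",
        "Content-Type": "application/json",
    }


# Hypothetical response body containing only the fields read above.
sample_body = {
    "auth_token": {"access_token": "example-token"},
    "orgs": [{"uuid": "00000000-0000-0000-0000-000000000000"}],
}
token, org_id = extract_credentials(sample_body)
print(auth_headers(token)["Authorization"], org_id)
```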

### Create a new project using the variable {{projectname}}
# @name new_project
POST {{base_url}}orgs/{{org_id}}/projects/
Authorization: Bearer {{access_token}}
Content-Type: application/json

{
  "name": "{{projectname}}",
  "org": "{{org_id}}"
}

### Parse the response and store the project ID
@projectid = {{new_project.response.body.uuid}}

### Create a new table named {{tablename}} in the project {{projectname}}
# @name new_table
POST {{base_url}}orgs/{{org_id}}/projects/{{projectid}}/tables/
Authorization: Bearer {{access_token}}
Content-Type: application/json

{
  "name": "{{tablename}}",
  "project": "{{projectid}}",
  "description": "Hydrolix query head logs",
  "settings": {
    "merge": {
      "enabled": true
    },
    "autoingest": {
      "enabled": true,
      "source": "sqs://{{clientid}}-auto-query-head",
      "pattern": "^s3://{{clientid}}/logs/.*/head-i.*log.gz"
    }
  }
}
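The autoingest pattern decides which notified objects are ingested, so it is worth checking it against a few object URLs first. The sketch below uses Python's `re` module as a stand-in for whatever regular expression engine Hydrolix applies (an assumption), and the object keys are made-up examples.

```python
import re


def autoingest_pattern(client_id):
    """Expand the pattern from the table settings for a given client ID."""
    return f"^s3://{client_id}/logs/.*/head-i.*log.gz"


def matches(client_id, object_url):
    """True when the object URL would be selected by the autoingest pattern."""
    return re.match(autoingest_pattern(client_id), object_url) is not None


# Hypothetical object URLs, for illustration only.
for url in (
    "s3://hdxcli-xxxxx/logs/2021/head-i-0abc.log.gz",
    "s3://hdxcli-xxxxx/logs/2021/peer-i-0abc.log.gz",
    "s3://hdxcli-xxxxx/logs/head-i-0abc.log.gz",
):
    print(url, matches("hdxcli-xxxxx", url))
```

Note that the pattern requires at least one path segment between logs/ and the file name, and that its dots are unescaped, so they match any character rather than only a literal period.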

### Parse the response and store the table ID
@tableid = {{new_table.response.body.uuid}}

### Create a transform for the JSON format and upload it to our table
# @name new_transform
POST {{base_url}}orgs/{{org_id}}/projects/{{projectid}}/tables/{{tableid}}/transforms/
Authorization: Bearer {{access_token}}
Content-Type: application/json

{
  "name": "{{transformname}}",
  "type": "json",
  "table": "{{tableid}}",
  "settings": {
    "is_default": true,
    "output_columns": [
      {
        "name": "timestamp",
        "datatype": {
          "primary": true,
          "resolution": "s",
          "format": "2006-01-02 15:04:05.000 +00:00",
          "type": "datetime"
        }
      },
      {
        "name": "message",
        "datatype": {
          "index": true,
          "type": "string"
        }
      },
      {
        "name": "level",
        "datatype": {
          "index": true,
          "type": "string"
        }
      },
      {
        "name": "component",
        "datatype": {
          "index": true,
          "type": "string"
        }
      },
      {
        "name": "logs_file",
        "datatype": {
          "type": "string",
          "index": true,
          "source": {
            "from_automatic_value": "input_filename"
          }
        }
      }
    ],
    "compression": "gzip",
    "format_details": {
      "flattening": {
        "active": false,
        "map_flattening_strategy": null,
        "slice_flattening_strategy": null
      }
    }
  }
}
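The timestamp column is the one most likely to reject rows, so a quick local check of a sample value can save a failed ingest. This sketch assumes the format string follows Go's reference-time layout, as its 2006-01-02 15:04:05 shape suggests, and that the trailing +00:00 is matched literally. The `strptime` format is a hand-written Python equivalent, and the sample value is made up.

```python
from datetime import datetime, timezone

# Hand-written Python equivalent of "2006-01-02 15:04:05.000 +00:00" (assumption).
PY_FORMAT = "%Y-%m-%d %H:%M:%S.%f +00:00"


def parse_log_timestamp(value):
    """Parse a log timestamp; raises ValueError when the value does not fit the format."""
    return datetime.strptime(value, PY_FORMAT).replace(tzinfo=timezone.utc)


# Hypothetical timestamp value as it might appear in a log record.
print(parse_log_timestamp("2021-03-04 12:34:56.789 +00:00").isoformat())
```

The transform stores the column with a resolution of seconds, so the fractional part is needed only for parsing.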
