Migrate to External PostgreSQL

Setting up PostgreSQL in high availability is important for ensuring that the Hydrolix cluster remains available and performs well in a production environment. It provides enhanced uptime, performance, scalability, and fault tolerance, which are all essential for Hydrolix.

For testing/dev purpose you can use the built-in Hydrolix PostgreSQL instance. For production applications, we recommend migrating this PostgreSQL into a high availability external instance.

Step by step migration in GKE

This step by step migration requires access to the current cluster and setting up a new database on google cloud using Cloud SQL.

Get the ROOT_DB_PASSWORD from k8s secret:

kubectl get secret general -o jsonpath='{.data.ROOT_DB_PASSWORD}' | base64 -d ; echo

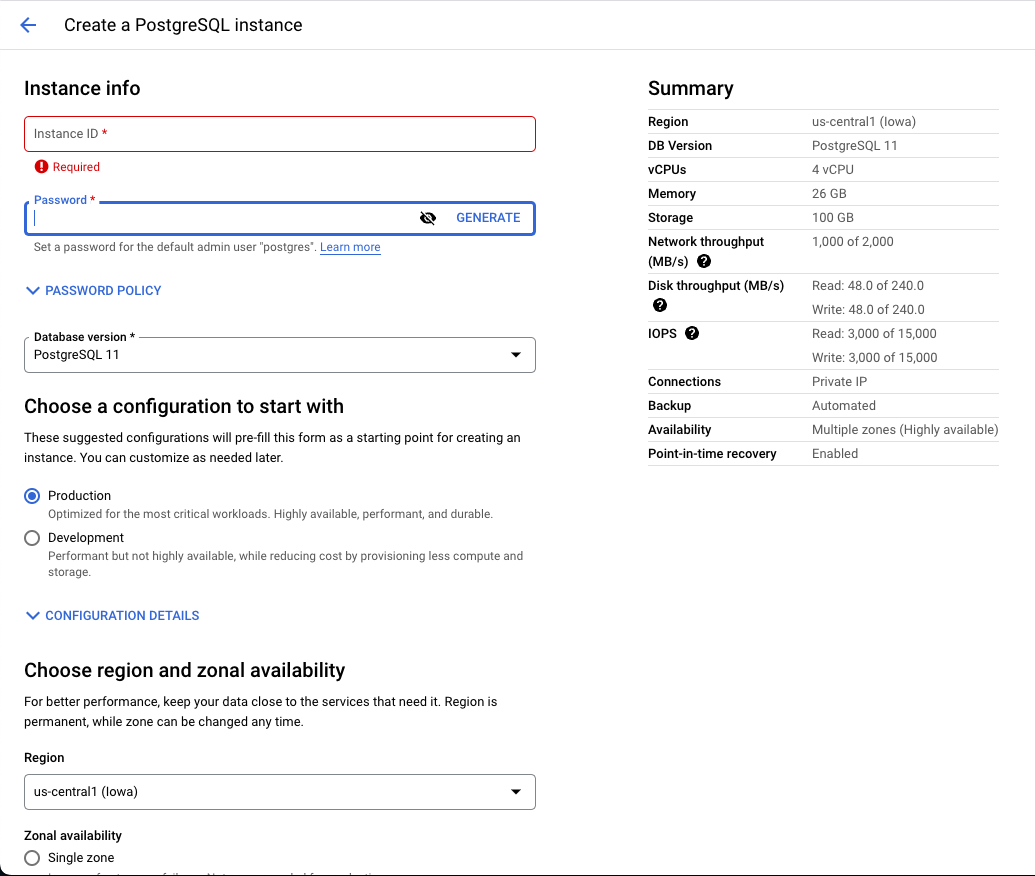

Create a new postgres database:

- Verify that the new database is on the same region as the k8s cluster.

- Create a new postgres database using version 11

- Use the password previously retrieved from the k8s cluster

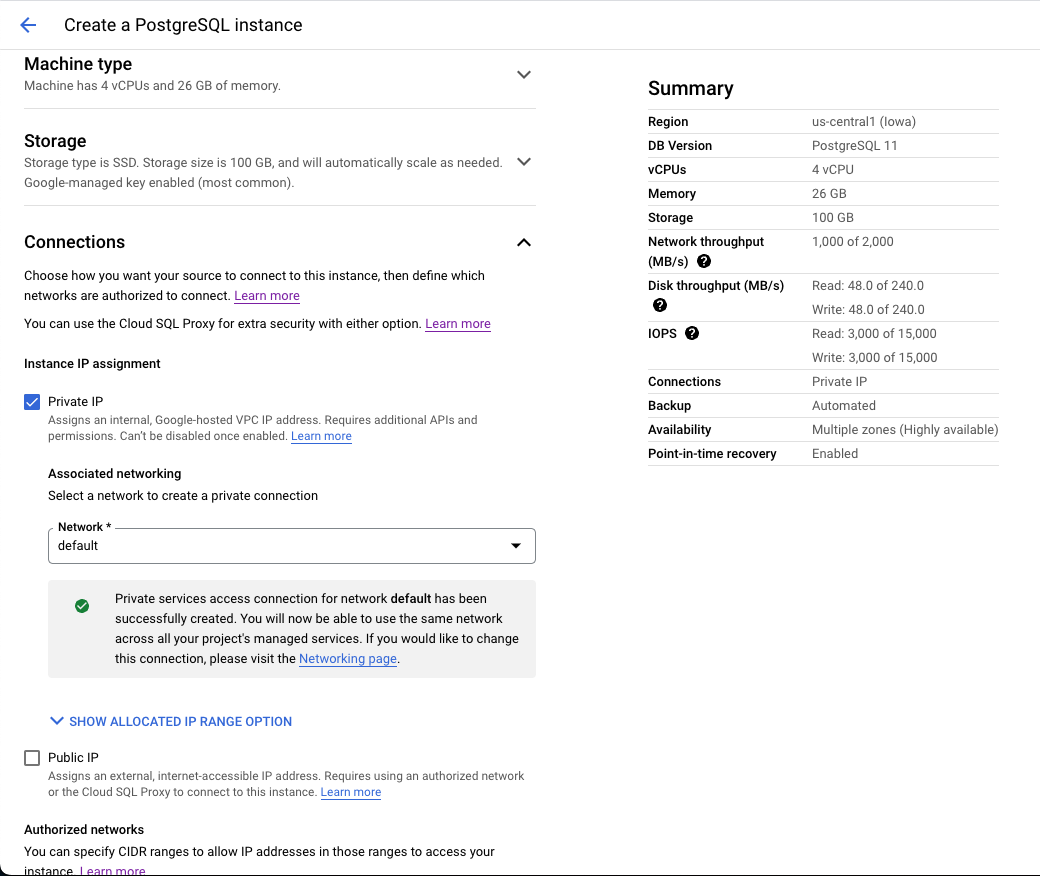

- Click on Customize your Instance and then Connections

- Disable Public IP and enable Private IP.

- Select the proper network and ensure that the VPC can connect to your K8s cluster.

While your External Postgres is being created

- Log into your existing k8s Postgres

kubectl exec -it postgres-0 /bin/bash

- Install vim:

apt-get update

apt-get -y install vim

- Export all the roles from the database:

pg_dumpall -U turbine --roles-only > /pv/data/roles.sql

- The file includes too many roles and ALTER command which aren't compatible with Cloud SQL, we need to modify it from something like that:

--

-- PostgreSQL database cluster dump

--

SET default_transaction_read_only = off;

SET client_encoding = 'UTF8';

SET standard_conforming_strings = on;

--

-- Roles

--

CREATE ROLE config_api;

ALTER ROLE config_api WITH NOSUPERUSER INHERIT NOCREATEROLE NOCREATEDB LOGIN NOREPLICATION NOBYPASSRLS PASSWORD 'md527cfa08393d630740c9b73f83xxxxxxx';

CREATE ROLE grafana;

ALTER ROLE grafana WITH NOSUPERUSER INHERIT NOCREATEROLE NOCREATEDB LOGIN NOREPLICATION NOBYPASSRLS PASSWORD 'md57f2d9019e5ef927b0e52853d7yyyyyyy';

CREATE ROLE keycloak;

ALTER ROLE keycloak WITH NOSUPERUSER INHERIT NOCREATEROLE NOCREATEDB LOGIN NOREPLICATION NOBYPASSRLS PASSWORD 'md5ba91f2107aac1f213e1d674d7zzzzzzz';

CREATE ROLE query_api;

ALTER ROLE query_api WITH NOSUPERUSER INHERIT NOCREATEROLE NOCREATEDB LOGIN NOREPLICATION NOBYPASSRLS PASSWORD 'md553161b150a3d04d0cef1f05b6aaaaaaa';

CREATE ROLE superset;

ALTER ROLE superset WITH NOSUPERUSER INHERIT NOCREATEROLE NOCREATEDB LOGIN NOREPLICATION NOBYPASSRLS PASSWORD 'md51dd754c88323aeb745cc3ae74bbbbbbb';

CREATE ROLE superset1;

ALTER ROLE superset1 WITH NOSUPERUSER INHERIT NOCREATEROLE NOCREATEDB LOGIN NOREPLICATION NOBYPASSRLS PASSWORD 'md5050b4df6f9f2ae09b4736a880ccccccc';

CREATE ROLE turbine;

ALTER ROLE turbine WITH SUPERUSER INHERIT CREATEROLE CREATEDB LOGIN REPLICATION BYPASSRLS PASSWORD 'md571302861afa3b6eb33d7d9230ddddddd';

--

-- Role memberships

--

GRANT config_api TO turbine GRANTED BY turbine;

GRANT grafana TO turbine GRANTED BY turbine;

GRANT keycloak TO turbine GRANTED BY turbine;

GRANT query_api TO turbine GRANTED BY turbine;

GRANT superset TO turbine GRANTED BY turbine;

GRANT superset1 TO turbine GRANTED BY turbine;

--

-- PostgreSQL database cluster dump complete

--

- To something like that:

--

-- PostgreSQL database cluster dump

--

SET default_transaction_read_only = off;

SET client_encoding = 'UTF8';

SET standard_conforming_strings = on;

--

-- Roles

--

CREATE ROLE config_api WITH NOSUPERUSER INHERIT NOCREATEROLE NOCREATEDB LOGIN NOREPLICATION NOBYPASSRLS PASSWORD 'md527cfa08393d630740c9b73f83xxxxxxx';

CREATE ROLE keycloak WITH NOSUPERUSER INHERIT NOCREATEROLE NOCREATEDB LOGIN NOREPLICATION NOBYPASSRLS PASSWORD 'md5ba91f2107aac1f213e1d674d7zzzzzzz';

CREATE ROLE query_api WITH NOSUPERUSER INHERIT NOCREATEROLE NOCREATEDB LOGIN NOREPLICATION NOBYPASSRLS PASSWORD 'md553161b150a3d04d0cef1f05b6aaaaaaa';

--

-- Role memberships

--

GRANT config_api TO postgres GRANTED BY postgres;

GRANT keycloak TO postgres GRANTED BY postgres;

GRANT query_api TO postgres GRANTED BY postgres;

--

-- PostgreSQL database cluster dump complete

--

So to summarize DELETE the role:

- grafana

- superset

- superset1

- turbine

Delete the line which are just creating the role like:

CREATE ROLE config_api;

Replace the ALTER command with CREATE.

And finally change the GRANT command from turbine to postgres for both databases and users.

After your cluster is created

- Get the IP address of your external postgres from google

- Try to connect to your cluster via psql, use the password retrieved earlier:

psql -h $IP_FROM_GOOGLE_CLOUD -U postgres

- If the connection works you can then import the new role:

psql -h $IP_FROM_GOOGLE_CLOUD -U postgres < /pv/data/roles.sql

Scale down services using postgres

If the connection and the role import worked properly we have to scale down all the services which are leveraging postgres:

- stream-peer

- merge-peer

- kafka-peer

- kinesis-peer

- batch-peer

- turbine-api

- keycloak

- intake-api

- decay

- vacuum

- merge-head

Within your cluster YAML/UI you should also ensure to set all your Hydrologs, Merge, Summary and other pools to 0.

batch-head:

replicas: 0

batch-peer:

replicas: 0

decay:

replicas: 0

intake-api:

replicas: 0

kafka-peer:

replicas: 0

kinesis-peer:

replicas: 0

keycloak:

replicas: 0

merge-head:

replicas: 0

merge-peer:

replicas: 0

turbine-api:

replicas: 0

vacuum:

replicas: 0

Query should continue to work and as stream-head and redpanda are still running we will buffer all the streaming data until the migration is done.

Now we need to wait.

Make sure to wait for all the components and pools you have set to 0 have been turned off. We are trying to ensure changes do not occur to the

catalogat this time.

Once all services using postgres are down

Once all the services leveraging are down, we can start doing the dump of the database:

- Start with keycloak:

pg_dump --create --clean -U turbine keycloak > /pv/data/keycloak.sql

- Then config_api:

pg_dump --create --clean -U turbine config_api > /pv/data/config_api.sql

- And finally catalog:

pg_dump --create --clean -U turbine catalog > /pv/data/catalog.sql

Importing the data to your new database

- Start with keycloak:

psql -h $IP_FROM_GOOGLE_CLOUD -U postgres < /pv/data/keycloak.sql

- Then config_api:

psql -h $IP_FROM_GOOGLE_CLOUD -U postgres < /pv/data/config_api.sql

- And finally catalog:

psql -h $IP_FROM_GOOGLE_CLOUD -U postgres < /pv/data/catalog.sql

Setting up the role for each database

If all the import are successful you can log into your external postgres and set database privileges:

psql -h $IP_FROM_GOOGLE_CLOUD -U postgres

grant all privileges on database keycloak to keycloak;

grant all privileges on database config_api to config_api;

grant all privileges on database catalog to query_api;

Update your Hydrolix config to use the new database

Last step is to update the Kubernetes configuration to point to the new postgres and start again all the different services, and disable your current postgres:

spec:

catalog_db_admin_db: postgres

catalog_db_admin_user: postgres

catalog_db_host: $IP_FROM_GOOGLE_CLOUD

scale:

postgres:

replicas: 0

Once the configuration is applied all the services have restarted you need to restart query-head and peer to leverage the new DB:

kubectl rollout restart deployment query-head

Updated 5 months ago